Transformer NLP & Machine Learning: size does count, but size isn’t everything!

Our second post on machine learning looks at how the Transformer model revolutionized Natural Language Processing.

In the last post we looked at how the impressive natural language processing performance of today’s neural networks is due in part to the sheer size of the models and training corpora being used.

Nevertheless, ‘brute force’ computing power and deep learning were not enough to fully address the complexity of natural language. In particular, models needed to be smarter about capturing the critical dependencies between words in different parts of the sentence.

A key step in this direction was the publication by Google in 2017 of ‘Transformer: A Novel Neural Network Architecture for Language Understanding’. It presented a new approach to natural language processing called the Transformer.

Transformer NLP explained

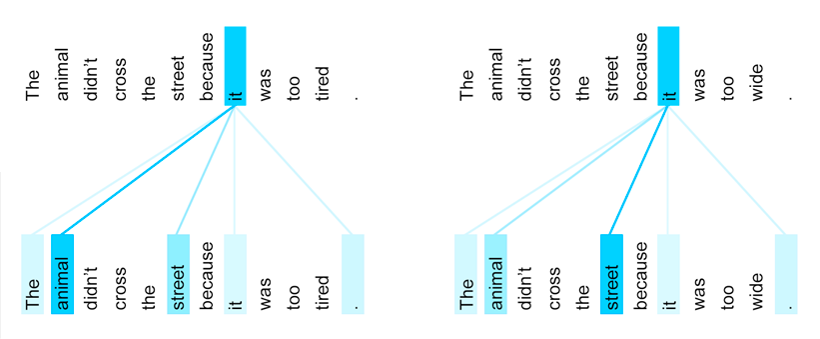

Consider these sentences:

- The animal didn’t cross the street because it was too tired.

- The animal didn’t cross the street because it was too wide.

In the first sentence ‘it’ refers to ‘animal’, but in the second sentence ‘it’ refers to ‘street’.

Natural language is full of these ambiguities and they create serious difficulties for automated language tasks like translation or answering questions.

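For example, in translating the sentences into French, the word ‘it’ requires a different rendering in each sentence to agree with the gender of the noun it refers to: the masculine ‘il’ for ‘animal’ in the first sentence and the feminine ‘elle’ for ‘rue’ (street) in the second.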

Transformer NLP: Paying ‘attention’

The Transformer NLP model is built around an ‘attention’ mechanism (more precisely, ‘self-attention’) that takes into account the relationships between all the words in the sentence. For each word, it computes a set of weightings that indicate which other words in the sentence are most relevant to its interpretation. In this way an ambiguous word like ‘it’ can be resolved quickly and efficiently.

Diagram from: Transformer: A Novel Neural Network Architecture for Language Understanding
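To make the idea of ‘differential weightings’ more concrete, here is a minimal sketch of attention weights in plain Python. It uses the scaled dot-product form of attention; the two-dimensional vectors are hand-picked for illustration only (in a real Transformer the query, key and value vectors are learned during training and have hundreds of dimensions), and the function names are hypothetical rather than taken from any library.

```python
import math

def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def dot(a, b):
    """Dot product of two equal-length vectors."""
    return sum(x * y for x, y in zip(a, b))

def attention_weights(query, keys):
    """Scaled dot-product attention weights of one word over all words."""
    scale = math.sqrt(len(query))
    return softmax([dot(query, k) / scale for k in keys])

def attend(query, keys, values):
    """Weighted average of the value vectors, using the attention weights."""
    weights = attention_weights(query, keys)
    dim = len(values[0])
    return [sum(w * v[i] for w, v in zip(weights, values)) for i in range(dim)]

# Assumption: toy vectors invented for this example, not learned values.
words = ["animal", "street", "it"]
keys = [[1.0, 0.0], [0.0, 1.0], [0.5, 0.5]]
values = keys                # reuse the keys as values to keep the toy small
query_for_it = [2.0, 0.0]    # made up so that "it" leans towards "animal"

weights = attention_weights(query_for_it, keys)
context = attend(query_for_it, keys, values)

for word, weight in zip(words, weights):
    print(f"{word}: {weight:.2f}")
```

With these made-up numbers, ‘animal’ receives the largest weight when the model interprets ‘it’, which is the behaviour we would want for the ‘too tired’ sentence. A different query vector would shift the weight towards ‘street’.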

Transformer networks pre-trained with this approach achieve top performance on standard NLP benchmarks. Compared to the sequential models that went before, they deliver better results while making more efficient use of the available processing power. Because the Transformer architecture processes all the words in a sentence at once, rather than one after another, it can also take advantage of the powerful parallel processing available in the GPUs increasingly used for NLP training.
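The difference between sequential and parallel-friendly processing can be sketched in a few lines of Python. This is a toy under stated assumptions, not real GPU code: each word is reduced to a single made-up number, the recurrent update rule is hypothetical, and the function names are invented for this example.

```python
import math

def recurrent_pass(inputs):
    """Sequential model: each state depends on the previous state."""
    state = 0.0
    states = []
    for x in inputs:
        # Hypothetical toy update; step t cannot start before step t-1 ends.
        state = math.tanh(0.5 * state + x)
        states.append(state)
    return states

def attend_position(i, inputs):
    """Attention-style output for position i, computed from the inputs only."""
    scores = [inputs[i] * k for k in inputs]
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return sum(e / total * v for e, v in zip(exps, inputs))

# Assumption: made-up scalar stand-ins for word vectors.
inputs = [0.2, -0.4, 0.9, 0.1]

# No position needs another position's output, so the order is irrelevant.
in_order = [attend_position(i, inputs) for i in range(len(inputs))]
reverse_order = [attend_position(i, inputs) for i in reversed(range(len(inputs)))]
reverse_order.reverse()

print(in_order == reverse_order)
print(recurrent_pass(inputs))
```

Because each attention output depends only on the inputs, the positions can be computed in any order, or all at the same time on parallel hardware. The recurrent loop, by contrast, has to walk through the sentence one step at a time.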

Next: putting Transformer NLP to work

The arrival of the Transformer model has pushed performance on language tasks to unprecedented levels. Some of the more spectacular results have received a good deal of publicity in recent months and I’ll be looking at some of these in a future article. But, from a practical point of view, it’s the model’s excellent performance on tasks like summarization, classification and semantic search that is of obvious immediate benefit. In my next post I’ll be looking at how we have explored the integration of some of these functions into Eidosmedia platforms & decoupled CMS. They have the potential to provide valuable tools for writers and editors, digital newsroom software, CMSs for banks and other applications.